Hiep Nguyen

October 15, 2024 • 20 min read

Integrating Amazon DynamoDB with Retool enhances your inventory management system by providing a scalable, high-performance NoSQL database solution. This blog explores how to design and implement DynamoDB for your Retool application, focusing on defining access patterns, leveraging schema flexibility, and understanding pricing models to optimize performance and cost.

Amazon DynamoDB is a fully managed, serverless NoSQL database designed to handle massive amounts of data with low latency and high availability. Unlike traditional relational databases that rely on structured schemas and SQL queries, DynamoDB embraces flexibility and speed, making it ideal for real-time applications.

Relational databases were designed for an era when storage was expensive, so they prioritize storing as little data as possible at the cost of CPU-heavy queries. SQL lets us assemble complex data relationships on the fly with joins, but as data grows, this process can become slow and resource-intensive.

In contrast, NoSQL databases like DynamoDB are optimized for the modern era, where storage is cheap and speed is critical. Instead of computing relationships at query time, we model a DynamoDB table around questions we know in advance and store related data together, so each answer can be retrieved with a simple API call without leaning heavily on CPU resources.

We’re going to explore how DynamoDB works by designing a database for RetoolerStock, our inventory management system. We’ll introduce key DynamoDB concepts and apply them step by step to build the database for RetoolerStock, so we learn by doing.

Let’s begin by outlining the general workflow of the application, and from there, define the access patterns we’ll use in DynamoDB.

A warehouse manager can manually update the inventory of products, including product details and stock levels, either directly or through integration with other services, such as an order management system.

If the stock of a product falls below a defined threshold, the system will automatically trigger an order for a specific quantity from the appropriate supplier and generate a shipping ticket.

Once the goods arrive at the warehouse, the manager can confirm the shipment, update the inventory, and record the restocking event in the inventory history.
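The reorder rule in this workflow can be sketched as a small function. This is a minimal illustration, not RetoolerStock’s actual implementation: the field names (`stock`, `reorder_threshold`, `reorder_quantity`, `supplier_id`) and the shape of the returned order and shipping ticket are assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Product:
    # Hypothetical fields; the article does not specify the product schema.
    product_id: str
    supplier_id: str
    stock: int
    reorder_threshold: int
    reorder_quantity: int


def check_reorder(product: Product) -> Optional[dict]:
    """Return an order plus a shipping ticket if stock is below the threshold, else None."""
    if product.stock >= product.reorder_threshold:
        return None  # Stock is sufficient; nothing to order.
    return {
        "order": {
            "product_id": product.product_id,
            "supplier_id": product.supplier_id,
            "quantity": product.reorder_quantity,
        },
        "shipping_ticket": {
            "product_id": product.product_id,
            "status": "PENDING",  # Confirmed later, when the goods arrive.
        },
    }


if __name__ == "__main__":
    widget = Product("P-001", "S-42", stock=3, reorder_threshold=10, reorder_quantity=50)
    print(check_reorder(widget))
```

When the manager later confirms the shipment, the ticket status would change and the stock would be increased, which is the restocking event recorded in the inventory history.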

Next, we’ll define a list of access patterns to help structure our table properly and ensure optimal query performance.

The first step is to define the main table that will store our product inventory. In DynamoDB, we store data in tables, and each table contains items (similar to rows in relational databases). Each item has attributes (like columns).

DynamoDB uses a primary key to uniquely identify each item in a table. The primary key can be just a partition key (a single attribute) or a composite key (partition key + sort key).

Because NoSQL databases work on a very different philosophy from relational databases, we need to avoid thinking about database design the way we would in SQL: there are no join operators or foreign keys. When working with DynamoDB, we usually need only one table, an approach called Single-Table Design. To model one-to-one, one-to-many and many-to-many relationships in a single table, we’ll use two patterns: Item Collections and Adjacency Lists.
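To make Item Collections concrete, here is a minimal in-memory sketch (plain Python, no AWS calls) of a single table in which a supplier and its products share one partition key. The `PK`/`SK` attribute names and the `SUPPLIER#`/`PRODUCT#` prefixes are a common naming convention used here as an assumption, not a DynamoDB requirement, and `query` only imitates a real Query with a `begins_with` condition on the sort key.

```python
# All entity types live in one "table"; PK + SK together must be unique.
TABLE = [
    {"PK": "SUPPLIER#S-42", "SK": "METADATA", "name": "Acme Parts", "email": "sales@acme.example"},
    {"PK": "SUPPLIER#S-42", "SK": "PRODUCT#P-001", "name": "Widget", "stock": 3},
    {"PK": "SUPPLIER#S-42", "SK": "PRODUCT#P-002", "name": "Gadget", "stock": 25},
    {"PK": "SUPPLIER#S-7", "SK": "METADATA", "name": "Bolt Co", "email": "hi@bolt.example"},
    {"PK": "SUPPLIER#S-7", "SK": "PRODUCT#P-003", "name": "Bolt", "stock": 400},
]


def query(pk: str, sk_prefix: str = "") -> list[dict]:
    """Imitate a Query: exact match on the partition key, optional begins_with on the sort key."""
    matches = [
        item for item in TABLE
        if item["PK"] == pk and item["SK"].startswith(sk_prefix)
    ]
    # Items in an item collection come back ordered by sort key.
    return sorted(matches, key=lambda item: item["SK"])


# One-to-many: a single request fetches one supplier's products (its item collection).
products = query("SUPPLIER#S-42", "PRODUCT#")
print([p["name"] for p in products])  # ['Widget', 'Gadget']
```

Because the supplier record and its products share a partition key, no join is needed: the relationship is encoded in the keys themselves.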


One of DynamoDB’s greatest strengths is its schema flexibility. Unlike relational databases where every row must have the same schema, DynamoDB allows each item to have different attributes. This is helpful if different products need different information.
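In DynamoDB, only the key schema is enforced: every item must carry the primary key attributes, and everything else is optional and may differ from item to item. The sketch below imitates that rule in plain Python; the attribute names and the `validate_item` helper are hypothetical.

```python
KEY_SCHEMA = {"PK": str, "SK": str}  # Only the primary key is declared up front.


def validate_item(item: dict) -> None:
    """Raise if a key attribute is missing or has the wrong type; ignore all other attributes."""
    for name, expected_type in KEY_SCHEMA.items():
        if name not in item:
            raise ValueError(f"missing key attribute {name!r}")
        if not isinstance(item[name], expected_type):
            raise TypeError(f"key attribute {name!r} must be {expected_type.__name__}")


# Two products with completely different non-key attributes can live side by side.
laptop = {"PK": "PRODUCT#P-100", "SK": "METADATA", "ram_gb": 16, "warranty_years": 2}
apples = {"PK": "PRODUCT#P-200", "SK": "METADATA", "expiry_date": "2024-11-01", "organic": True}

for item in (laptop, apples):
    validate_item(item)  # Both pass: only PK and SK are checked.
```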

To understand how AWS bills us for DynamoDB usage, it’s important to understand a few key concepts:

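DynamoDB offers two types of consistency when reading data: eventually consistent reads (the default), which might not reflect a write that completed moments earlier, and strongly consistent reads, which return the most up-to-date data.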

In most cases, where real-time accuracy isn’t essential, eventual consistency is preferred because it consumes half the read capacity of a strongly consistent read and can have lower latency.

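DynamoDB offers two capacity modes: on-demand, where you pay per request and DynamoDB scales automatically with traffic, and provisioned, where you specify how many read capacity units (RCUs) and write capacity units (WCUs) per second your table can use.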

An RCU represents the capacity to perform one strongly consistent read per second for an item up to 4 KB in size. For eventually consistent reads, one RCU can handle two reads per second.
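The RCU rule translates into a small calculation: round the item size up to the next 4 KB, then halve the result for eventually consistent reads. This sketch assumes a single-item read (for example, GetItem) and treats 1 KB as 1,024 bytes; the function name is ours.

```python
import math

RCU_BLOCK_BYTES = 4 * 1024  # One RCU covers up to 4 KB per strongly consistent read.


def read_capacity_units(item_size_bytes: int, strongly_consistent: bool) -> float:
    """RCUs consumed by reading one item of the given size."""
    blocks = max(1, math.ceil(item_size_bytes / RCU_BLOCK_BYTES))  # Round up to whole 4 KB blocks.
    return float(blocks) if strongly_consistent else blocks / 2  # Eventual reads cost half.


print(read_capacity_units(3 * 1024, strongly_consistent=True))    # 1.0
print(read_capacity_units(10 * 1024, strongly_consistent=True))   # 3.0
print(read_capacity_units(10 * 1024, strongly_consistent=False))  # 1.5
```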

A WCU represents the capacity to perform one write per second for an item up to 1 KB in size. Larger items need one additional WCU for every additional 1 KB, rounded up; writing a 3.5 KB item, for example, consumes 4 WCUs.

Note: Even if we modify only a small attribute of a large 20 KB item, the write still consumes WCUs for the entire item’s size, which is 20 WCUs.
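The same arithmetic applies to writes, with 1 KB blocks instead of 4 KB. For an update, DynamoDB bills on the larger of the item’s size before and after the change, which is why touching one small attribute of a 20 KB item still costs 20 WCUs. This sketch assumes sizes in 1,024-byte kilobytes; the function names are ours.

```python
import math

WCU_BLOCK_BYTES = 1024  # One WCU covers up to 1 KB per standard write.


def write_capacity_units(item_size_bytes: int) -> int:
    """WCUs consumed by writing one item of the given size (rounded up to whole KB)."""
    return max(1, math.ceil(item_size_bytes / WCU_BLOCK_BYTES))


def update_capacity_units(size_before: int, size_after: int) -> int:
    """An update is billed on the larger of the item's old and new sizes."""
    return write_capacity_units(max(size_before, size_after))


print(write_capacity_units(1536))  # 2: a 1.5 KB item rounds up to 2 KB
# Shrinking a tiny attribute on a 20 KB item still costs 20 WCUs.
print(update_capacity_units(20 * 1024, 20 * 1024 - 5))  # 20
```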

The number of RCUs and WCUs you consume directly determines your DynamoDB costs.

If you choose strong consistency for reads, the cost will be double that of eventually consistent reads, because each strongly consistent read consumes twice as many RCUs.

Based on the concepts discussed, here are some best practices for optimizing DynamoDB usage:

DynamoDB automatically shards our table into multiple partitions, distributing them across several servers. This enables horizontal scaling of our database. But how does DynamoDB know which partition to write an item to or retrieve the correct item when the table is distributed across many servers? DynamoDB uses the partition key, which is passed through a hash function to determine the appropriate partition.

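A hash function is a mathematical function that takes an input (in this case, the partition key) and generates a fixed-size output, which DynamoDB uses to assign the item to a partition. Because the function is deterministic, the same partition key always produces the same output, so DynamoDB routes both the original write and every later read of that key to the same partition.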
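The routing step can be sketched in a few lines. DynamoDB’s internal hash function and partition map are not public, so this sketch uses MD5 from Python’s standard library and a simple modulo over a fixed partition count purely as stand-ins; the point is that the mapping is deterministic.

```python
import hashlib

NUM_PARTITIONS = 4  # Hypothetical; DynamoDB manages the real partition count for you.


def partition_for(partition_key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a partition key to a partition index via a hash (MD5 used as a stand-in)."""
    # Python's built-in hash() is salted per process, so a stable digest is used instead.
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


# The same key always routes to the same partition, for both writes and reads.
print(partition_for("PRODUCT#P-001") == partition_for("PRODUCT#P-001"))  # True
```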

By default, DynamoDB lets us retrieve data efficiently only by the partition key (and, optionally, the sort key). This is known as a Query operation.

Query: Since DynamoDB needs the partition key to identify which partition holds the item, you must provide an exact partition key value and can optionally add a condition on the sort key (for example, equality, a range, or begins_with). A query operation is highly efficient because it looks up items directly by the partition key (and sort key if needed). DynamoDB quickly finds the correct partition and returns only the data you’re asking for, making queries fast and cost-effective.
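In code, a Query is a request that names the partition key value and, optionally, a condition on the sort key. The sketch below only builds the request parameters in the format used by the low-level boto3 DynamoDB client’s `query` call, so it runs without AWS credentials; the table name `RetoolerStock` and the `PK`/`SK` attribute names are assumptions.

```python
from typing import Optional


def build_query_params(table: str, pk: str, sk_prefix: Optional[str] = None) -> dict:
    """Build keyword arguments for a low-level DynamoDB Query request."""
    condition = "PK = :pk"  # The partition key must be matched with equality.
    values = {":pk": {"S": pk}}
    if sk_prefix is not None:
        # Optional sort-key condition; begins_with is one of the supported operators.
        condition += " AND begins_with(SK, :sk)"
        values[":sk"] = {"S": sk_prefix}
    return {
        "TableName": table,
        "KeyConditionExpression": condition,
        "ExpressionAttributeValues": values,
    }


params = build_query_params("RetoolerStock", "SUPPLIER#S-42", "PRODUCT#")
print(params["KeyConditionExpression"])  # PK = :pk AND begins_with(SK, :sk)
# With credentials configured, this could be sent as:
#   boto3.client("dynamodb").query(**params)
```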

When you add a filter expression to a query or scan, you are charged for the total size of the data read before the filter is applied. For example, if you scan a table and then apply a filter to reduce the results, you still pay based on the total size scanned, not just the items returned.
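A short calculation shows why a filter does not reduce the bill. For a Query, DynamoDB adds up the sizes of all items it reads and rounds the total up to the next 4 KB; the filter expression is applied only afterwards. The item sizes and the `stock < 10` filter below are made-up values for illustration, and eventually consistent reads are assumed.

```python
import math

items_read = [
    {"name": "Widget", "stock": 3, "size_bytes": 2_000},
    {"name": "Gadget", "stock": 25, "size_bytes": 3_500},
    {"name": "Gizmo", "stock": 7, "size_bytes": 6_000},
    {"name": "Doohickey", "stock": 120, "size_bytes": 4_500},
]


def query_rcus(items: list, strongly_consistent: bool = False) -> float:
    """RCUs for a Query: total size of items read, rounded up to whole 4 KB blocks."""
    total = sum(item["size_bytes"] for item in items)
    blocks = math.ceil(total / 4096)
    return float(blocks) if strongly_consistent else blocks / 2


returned = [item for item in items_read if item["stock"] < 10]  # Filter runs after the read.
print(len(returned), "of", len(items_read), "items returned")  # 2 of 4 items returned
print(query_rcus(items_read))  # 2.0: charged on all four items read
print(query_rcus(returned))    # 1.0: what it would cost if only the matches were read
```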

However, there are times when we need to retrieve data based on other attributes that aren’t part of the primary key, like searching for suppliers by email or finding products from a specific supplier. Without an index on those attributes, a Scan operation is required.

Scan: A scan checks every item in the table, regardless of the partition key, since it doesn’t know which partition contains the item. This is like a full table search, making scans slow and costly, especially for large tables. Scans should be avoided when performance is critical, unless you’re working with small datasets or specific cases that don’t allow querying.

If an access pattern would otherwise require a scan and performance matters, a Global Secondary Index (GSI) can help. A GSI lets you query the table using a different partition key and sort key built from non-primary-key attributes, improving performance while avoiding the cost and inefficiency of a scan.
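The difference between a Scan and a GSI can be illustrated by counting how many items each approach examines to find a supplier by email. This is an in-memory imitation, not DynamoDB itself: `build_email_index` stands in for the index DynamoDB would maintain automatically for a GSI whose partition key is `email`, and all names and data are hypothetical.

```python
from collections import defaultdict

suppliers = [
    {"PK": f"SUPPLIER#S-{i}", "SK": "METADATA", "email": f"contact{i}@supplier.example"}
    for i in range(1000)
]


def scan_by_email(table: list, email: str) -> tuple[list, int]:
    """Scan: examine every item, then keep the matches. Returns (matches, items_examined)."""
    matches = [item for item in table if item.get("email") == email]
    return matches, len(table)


def build_email_index(table: list) -> dict:
    """Stand-in for a GSI keyed on email (DynamoDB maintains a real GSI for you)."""
    index = defaultdict(list)
    for item in table:
        if "email" in item:  # Items without the attribute are not written to the index.
            index[item["email"]].append(item)
    return index


def query_by_email(index: dict, email: str) -> tuple[list, int]:
    """GSI query: jump straight to the matching key. Returns (matches, items_examined)."""
    matches = index.get(email, [])
    return matches, len(matches)


index = build_email_index(suppliers)
print(scan_by_email(suppliers, "contact500@supplier.example")[1])  # 1000
print(query_by_email(index, "contact500@supplier.example")[1])     # 1
```

The trade-off is that every write to `suppliers` must also update the index, which is exactly the extra cost discussed next.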

Whenever the base table is updated, any affected attributes in secondary indexes also need to be updated, which incurs additional write costs. If non-key attributes are needed only occasionally, it is often more cost-effective not to project them into the index and instead fetch them from the main table with an extra query. That extra read is typically cheaper than keeping projected copies up to date on every write, though the balance depends on how often the table is written compared with how often those attributes are read.


When choosing a primary key (Partition Key and Sort Key) for DynamoDB, it’s crucial to follow certain guidelines to ensure efficient data storage and querying.

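Cardinality refers to the number of unique values in a key: a high-cardinality key has many distinct values (such as a product ID), while a low-cardinality key has only a few (such as a stock status).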

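When the partition key has high cardinality, data can be distributed across many servers effectively. Why? Each distinct partition key value is hashed independently, so many distinct values spread reads and writes evenly across partitions. With only a few distinct values, all traffic is funneled into a handful of partitions, creating hot partitions that can be throttled while the rest of the table sits idle.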
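A quick simulation makes the cardinality argument concrete: hash 10,000 writes onto a small set of partitions, once with a unique product ID as the partition key and once with a two-value stock status. As before, MD5 and the partition count of 8 are stand-ins for DynamoDB’s internal hashing, and the key values are invented.

```python
import hashlib
from collections import Counter

NUM_PARTITIONS = 8  # Hypothetical partition count.


def partition_for(key: str) -> int:
    """Deterministically map a key to a partition (MD5 as a stand-in hash)."""
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % NUM_PARTITIONS


def load_per_partition(keys: list) -> Counter:
    """Count how many writes each partition would receive."""
    return Counter(partition_for(k) for k in keys)


high = load_per_partition([f"PRODUCT#{i}" for i in range(10_000)])
low = load_per_partition(["IN_STOCK" if i % 2 else "OUT_OF_STOCK" for i in range(10_000)])

print(len(high), max(high.values()))  # writes spread across all partitions
print(len(low), max(low.values()))    # at most 2 partitions absorb every write
```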

The same principles apply to GSIs as they do to base tables. If a GSI’s partition key has low cardinality (few unique values), many writes are directed to a small number of partitions, creating hotspots. A hotspot can overwhelm a server, causing the GSI to throttle. If the GSI is throttled, it can’t keep up with updates from the base table, and because DynamoDB must propagate every base-table write to its GSIs, this backpressure can spread back to the base table. As a result, writes to the base table may start getting throttled as well, slowing down the entire system.

When choosing a sort key, it depends on your access patterns—how you need to query data. The sort key should support ordering or range queries, such as sorting by date or status.
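Sort keys are stored in order within a partition, so a well-chosen sort key turns "what happened between two dates" into a single range read. The sketch below keeps an inventory-history item collection sorted by an ISO 8601 timestamp sort key (which sorts correctly as a plain string) and imitates a `BETWEEN` key condition with `bisect`; the `HISTORY#` prefix and the events are hypothetical.

```python
import bisect

# Sort keys for one product's history collection, kept in sorted order as DynamoDB does.
history_sks = sorted([
    "HISTORY#2024-09-28T08:15:00Z",
    "HISTORY#2024-10-02T14:00:00Z",
    "HISTORY#2024-10-09T09:30:00Z",
    "HISTORY#2024-10-14T17:45:00Z",
])


def between(sorted_keys: list, low: str, high: str) -> list:
    """Imitate 'SK BETWEEN :low AND :high' (inclusive) on sorted sort keys."""
    start = bisect.bisect_left(sorted_keys, low)
    end = bisect.bisect_right(sorted_keys, high)
    return sorted_keys[start:end]


october = between(history_sks, "HISTORY#2024-10-01", "HISTORY#2024-10-31T23:59:59Z")
print(october)  # the three October restocking events, oldest first
```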

The real power of DynamoDB comes from combining the partition key and sort key to optimize for your access patterns. You need to consider how you’ll retrieve and access the data in your application. Here’s a step-by-step process:

The following videos demonstrate how to design the database for RetoolerStock and set up the AWS DynamoDB table accordingly.

Video 1: https://drive.google.com/file/d/1nXsjxLOTcPHYfggPwiNqD9kL_wiI17Ww/view?usp=drive_link

Video 2: https://drive.google.com/file/d/1iKPU6Rs_k6mBct7ZRx0n9tRXidCfzfvz/view?usp=drive_link


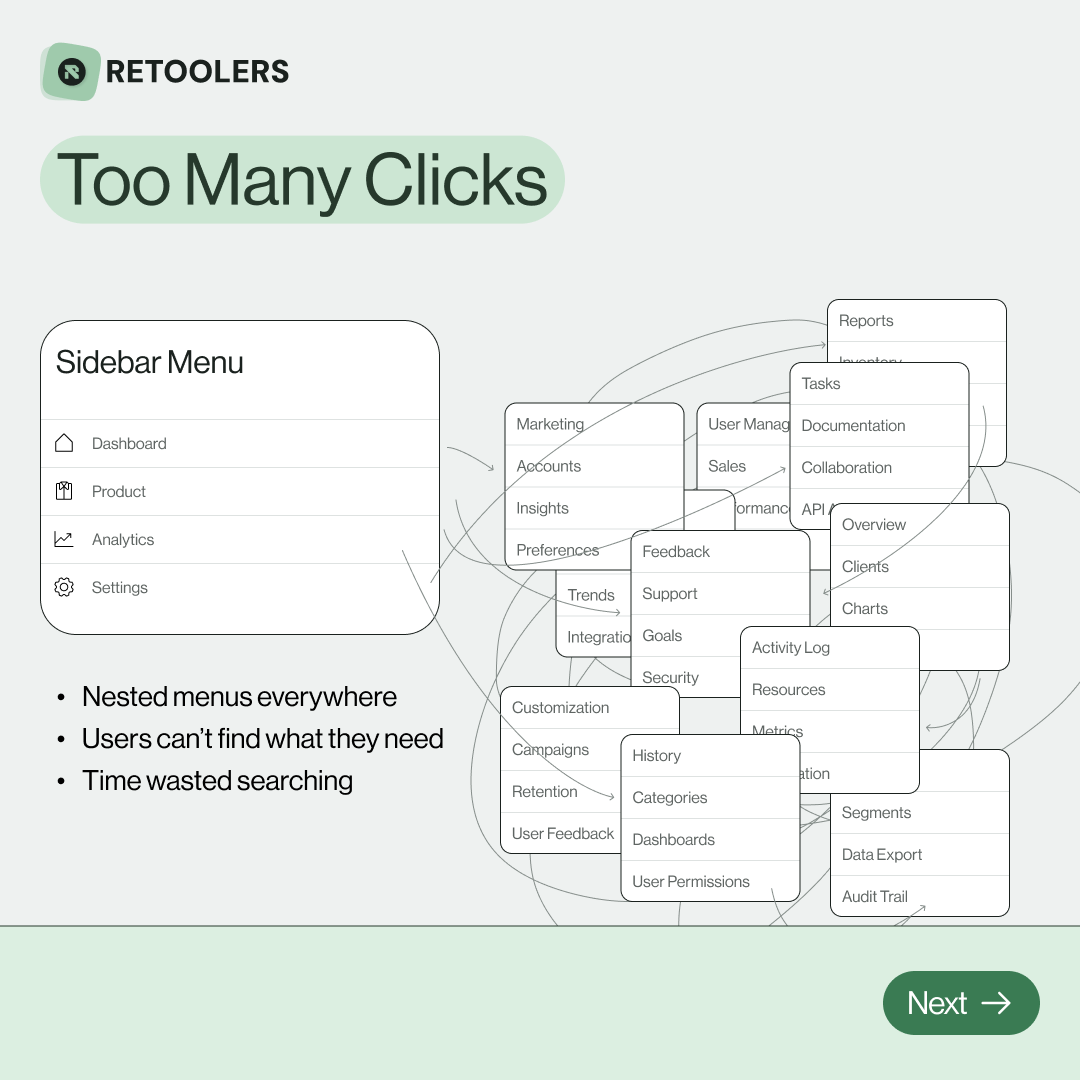

🔎 Internal tools often fail because of one simple thing: Navigation.

Too many clicks, buried menus, lost users.

We broke it down in this 4-slide carousel:

1️⃣ The problem (too many clicks)

2️⃣ The fix (clear navigation structure)

3️⃣ The Retool advantage (drag-and-drop layouts)

4️⃣ The impact (happier teams)

💡 With Retool, you can design internal tools that are easy to use, fast to build, and simple to maintain.

👉 Swipe through the carousel and see how better UX = better productivity.

📞 Ready to streamline your tools? Book a call with us at Retoolers.

🚀From idea → app in minutesBuilding internal tools used to take weeks.

Now, with AI App Generation in Retool, you can describe what you want in plain English and let AI do the heavy lifting.

At Retoolers, we help teams move faster by combining AI + Retool to create tools that actually fit their workflows.

👉 Check out our blog for the full breakdown: https://lnkd.in/gMAiqy9F

As part of our process, you’ll receive a FREE business analysis to assess your needs, followed by a FREE wireframe to visualize the solution. After that, we’ll provide you with the most accurate pricing and the best solution tailored to your business. Stay tuned—we’ll be in touch shortly!